AI search promises vs traditional search reality

AI search has been marketed as the silver bullet for discovery: users ask questions in natural language and magically get the perfect answer. In reality, traditional search (based on keywords, filters, and ranking algorithms) still powers most SaaS platforms because it’s predictable, fast, and explainable.

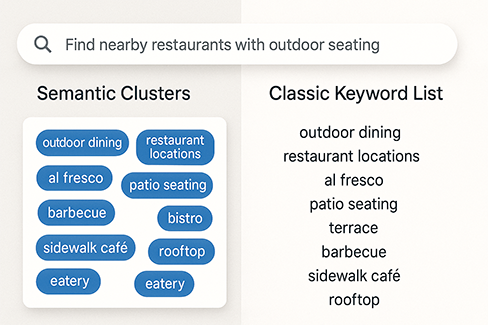

The hype is that AI will replace keyword search entirely. The reality is that AI search augments but doesn’t replace traditional methods. Vector-based semantic retrieval works well for unstructured content, but it often underperforms on highly structured or domain-specific data.

Product leaders should ask: does AI search improve the customer journey measurably, or does it just make a demo look impressive?

Current state of AI search: what actually works

Semantic search success stories and limitations

Works well in practice:

- Technical documentation (e.g., developer portals).

- Long-tail queries where synonyms and rephrasing break keyword search.

- Customer support knowledge bases where “close enough” answers help users.

Limitations:

- Semantic similarity ≠ true relevance. Retrieval sometimes pulls tangential but not actionable results.

- Domain-specific language (e.g., medical or legal terms) can confuse general-purpose embedding models.

- Semantic search requires ongoing evaluation and tuning; it is not a plug-and-play feature.

Natural language queries: when they work and when they don’t

- Work: Conversational phrasing like “How do I reset my account password?”

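- Fail: Highly specific filters like “Show me all invoices above $5,000 from last quarter.” This query depends on an exact amount threshold and date range, which embedding similarity does not enforce, so traditional faceted search outperforms AI here.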

Hybrid approaches (structured filters + semantic search) deliver the best results.
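
A minimal sketch of the hybrid idea, assuming documents already carry both structured fields and an embedding. The `Doc` fields, the toy two-dimensional vectors, and the `hybrid_search` helper are all hypothetical; a real system would get embeddings from a model and would push the filter into the search engine or vector DB.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    amount: float            # structured field, filtered exactly
    quarter: str             # structured field, e.g. "2024-Q4"
    embedding: list[float]   # toy vector; a real one comes from an embedding model


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(docs, query_embedding, min_amount=None, quarter=None, top_k=3):
    # Step 1: apply structured filters exactly, as faceted search would.
    candidates = [
        d for d in docs
        if (min_amount is None or d.amount > min_amount)
        and (quarter is None or d.quarter == quarter)
    ]
    # Step 2: rank only the surviving candidates by semantic similarity.
    ranked = sorted(
        candidates,
        key=lambda d: cosine(d.embedding, query_embedding),
        reverse=True,
    )
    return [d.doc_id for d in ranked[:top_k]]


docs = [
    Doc("inv-1", 7200.0, "2024-Q4", [0.9, 0.1]),
    Doc("inv-2", 300.0, "2024-Q4", [0.95, 0.05]),
    Doc("inv-3", 9100.0, "2024-Q3", [0.8, 0.2]),
    Doc("inv-4", 5600.0, "2024-Q4", [0.2, 0.8]),
]

results = hybrid_search(docs, [1.0, 0.0], min_amount=5000, quarter="2024-Q4")
```

Filtering first means the amount and date constraints are always honored, and similarity only decides the order within the valid set. Note that `inv-2` is the closest vector to the query but is correctly excluded because it fails the amount filter.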

Implementation complexity: resources actually needed

AI search isn’t free:

- Infrastructure: Vector DB (Pinecone, Qdrant, Weaviate, pgvector).

- Embeddings: Ongoing cost for generating/updating document vectors.

- Ops: Monitoring for relevance, latency, and hallucinations.

- Team: Even without ML PhDs, you need developers comfortable with APIs, indexing, and evaluation pipelines.

A simple MVP may cost $200–500/month (API + storage), while enterprise-scale implementations can exceed $10k/month once traffic grows.
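
To see where a monthly figure comes from, a rough back-of-the-envelope model can help. The sketch below is illustrative only: every price and volume in `example` is a placeholder assumption, not a quote from any vendor, and the model ignores query-embedding and engineering costs.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    num_chunks: int                   # document chunks in the index
    tokens_per_chunk: int             # average tokens per chunk
    monthly_reembed_fraction: float   # share of chunks re-embedded each month
    queries_per_month: int
    llm_tokens_per_query: int         # prompt + completion tokens per query
    embed_price_per_1k_tokens: float  # placeholder price, check your provider
    llm_price_per_1k_tokens: float    # placeholder price, check your provider
    vector_db_monthly_fee: float      # placeholder flat fee


def estimate_monthly_cost(c: CostInputs) -> dict:
    """Return a per-component monthly cost breakdown in dollars."""
    reembed_tokens = c.num_chunks * c.tokens_per_chunk * c.monthly_reembed_fraction
    embedding = reembed_tokens / 1000 * c.embed_price_per_1k_tokens
    llm = c.queries_per_month * c.llm_tokens_per_query / 1000 * c.llm_price_per_1k_tokens
    total = embedding + llm + c.vector_db_monthly_fee
    return {
        "embedding": embedding,
        "llm": llm,
        "vector_db": c.vector_db_monthly_fee,
        "total": total,
    }


# All numbers below are made-up inputs for illustration.
example = CostInputs(
    num_chunks=100_000,
    tokens_per_chunk=500,
    monthly_reembed_fraction=0.1,
    queries_per_month=50_000,
    llm_tokens_per_query=2_000,
    embed_price_per_1k_tokens=0.0001,
    llm_price_per_1k_tokens=0.002,
    vector_db_monthly_fee=70.0,
)
breakdown = estimate_monthly_cost(example)
```

With inputs like these, the LLM term dominates, which is why per-query token usage tends to matter more than storage as traffic grows.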

User experience: adoption rates and learning curves

Even the best AI search engine fails if users don’t trust it. Observed adoption patterns:

- Users try natural language queries once, but revert to filters if results feel inconsistent.

- Confidence increases when AI results are paired with traditional filters for transparency.

- Visual cues like “These results are AI-powered” can backfire if answers are occasionally wrong.

Lesson: UX design matters as much as the retrieval model.

Performance trade-offs: accuracy vs speed vs cost

- Accuracy: More context (larger embeddings, longer LLM prompts) can improve relevance, but larger embeddings cost more storage and compute, and longer prompts cost more tokens.

- Speed: Vector search adds ~100–300ms latency. Adding an LLM for re-ranking can push response times past 1–2s.

- Cost: Storing millions of embeddings and running inference at scale can easily exceed hosting costs of the core SaaS product.

Trade-offs are unavoidable. SaaS teams must decide whether AI search is a core differentiator or a “nice-to-have” feature.

Use cases where AI search adds real value

Knowledge bases and documentation

AI shines here. Users don’t want to navigate endless FAQs—they want an answer. Semantic search plus LLM summarization works well, provided hallucinations are controlled.

E-commerce product discovery

Semantic matching helps with ambiguous queries (“comfortable laptop bag for travel”), but AI must be layered with filters (price, size, color) to avoid irrelevant results.

Enterprise data search

Large orgs with fragmented data (emails, tickets, wikis, reports) benefit from vector search. However, strict governance and compliance checks are required to avoid exposing sensitive data.
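
One common way to enforce this is to filter retrieved results against the user's permissions before anything is shown or passed to an LLM. The sketch below assumes each indexed document stores the groups allowed to read it; the `Hit` type and `filter_by_access` helper are hypothetical names, not part of any particular vector DB.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hit:
    doc_id: str
    score: float
    allowed_groups: frozenset  # groups permitted to read this document


def filter_by_access(hits, user_groups):
    """Keep only hits the user may read; documents with no groups are denied."""
    user_groups = set(user_groups)
    return [h for h in hits if h.allowed_groups & user_groups]


hits = [
    Hit("wiki-onboarding", 0.91, frozenset({"everyone"})),
    Hit("hr-salary-report", 0.89, frozenset({"hr"})),
    Hit("ticket-4821", 0.80, frozenset({"support", "engineering"})),
    Hit("untagged-draft", 0.78, frozenset()),
]

visible = filter_by_access(hits, ["everyone", "support"])
visible_ids = [h.doc_id for h in visible]
```

Denying untagged documents by default is a deliberate choice here: a missing permission label should hide a document, not expose it. In production the same check is usually pushed into the vector query as a metadata filter so that restricted documents never leave the index.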

Technical challenges: hallucinations, relevance, freshness

- Hallucinations: LLMs can generate plausible but false answers. Mitigation: show source snippets.

- Relevance drift: Models can surface “similar” but useless documents. Requires constant tuning.

- Freshness: Embeddings need to be updated whenever content changes, which adds recurring cost and complexity.

AI search isn’t a “set it and forget it” feature—it requires ongoing ops.
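
For the freshness problem, one way to limit recurring cost is to re-embed only documents whose content actually changed. A minimal sketch, assuming you store a content hash alongside each vector at indexing time; `plan_reembedding` and its dictionary inputs are hypothetical.

```python
import hashlib


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reembedding(current_docs: dict, indexed_hashes: dict):
    """Compare live content against hashes stored at the last indexing run.

    current_docs:   doc_id -> current text
    indexed_hashes: doc_id -> hash recorded when the doc was last embedded
    Returns (ids to embed or re-embed, ids to delete from the index).
    """
    to_embed = [
        doc_id for doc_id, text in current_docs.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    ]
    to_delete = [doc_id for doc_id in indexed_hashes if doc_id not in current_docs]
    return sorted(to_embed), sorted(to_delete)


indexed = {
    "faq-1": content_hash("How to reset your password"),
    "faq-2": content_hash("Billing cycles explained"),
    "faq-3": content_hash("Legacy export tool"),
}
current = {
    "faq-1": "How to reset your password",            # unchanged
    "faq-2": "Billing cycles explained (updated)",    # edited
    "faq-4": "Setting up single sign-on",             # new
}

to_embed, to_delete = plan_reembedding(current, indexed)
```

Unchanged documents are skipped entirely, so embedding spend scales with the rate of content change rather than the size of the corpus.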

ROI measurement: metrics beyond engagement

Traditional metrics like “search usage” aren’t enough. Instead, measure:

- Task completion rate (did the search actually solve the user’s problem?).

- Deflection rate (how many tickets or support calls were avoided).

- Conversion impact (for e-commerce/product discovery).

Without tying AI search to business KPIs, ROI is guesswork.
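
These metrics can be computed from session-level logs. The sketch below assumes each search session records three outcome flags; the `SearchSession` fields are hypothetical, and deflection is simplified here to "sessions not followed by a support ticket", which is only one of several possible definitions.

```python
from dataclasses import dataclass


@dataclass
class SearchSession:
    task_completed: bool   # e.g. user confirmed the answer helped
    opened_ticket: bool    # user contacted support after searching
    converted: bool        # e.g. purchase or signup after searching


def search_kpis(sessions):
    """Return the three rates as fractions between 0 and 1."""
    n = len(sessions)
    if n == 0:
        return {"task_completion_rate": 0.0, "deflection_rate": 0.0, "conversion_rate": 0.0}
    return {
        "task_completion_rate": sum(s.task_completed for s in sessions) / n,
        "deflection_rate": sum(not s.opened_ticket for s in sessions) / n,
        "conversion_rate": sum(s.converted for s in sessions) / n,
    }


sessions = [
    SearchSession(task_completed=True, opened_ticket=False, converted=True),
    SearchSession(task_completed=True, opened_ticket=False, converted=False),
    SearchSession(task_completed=False, opened_ticket=True, converted=False),
    SearchSession(task_completed=True, opened_ticket=False, converted=False),
]

kpis = search_kpis(sessions)
```

Comparing these rates between users who see AI results and a control group on keyword search gives a more defensible ROI signal than raw search volume.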

Pragmatic roadmap: when and how to implement

- Start small: Add semantic search to one domain (docs, FAQs).

- Hybrid approach: Keep keyword + filters alongside AI results.

- Monitor: Track precision, latency, and user trust signals.

- Iterate: Adjust chunking, embeddings, and ranking models.

- Scale: Only expand to full platform search once ROI is clear.
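
For the monitoring step, precision can be tracked with a small labeled set of queries and their known-relevant documents. A minimal sketch of precision@k, assuming you maintain such a set by hand; the document IDs and query data are made up for illustration.

```python
def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved documents that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = retrieved[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    return hits / k


def mean_precision_at_k(labeled_queries, k):
    """Average precision@k over (retrieved, relevant) pairs."""
    scores = [precision_at_k(retrieved, relevant, k) for retrieved, relevant in labeled_queries]
    return sum(scores) / len(scores) if scores else 0.0


# Each entry: (ranked doc ids returned by search, set of doc ids judged relevant).
labeled_queries = [
    (["a", "b", "c", "d"], {"a", "c"}),
    (["x", "y", "z"], {"y"}),
]

p_single = precision_at_k(["a", "b", "c", "d"], {"a", "c"}, k=2)
p_mean = mean_precision_at_k(labeled_queries, k=2)
```

Re-running the same labeled set after each change to chunking, embeddings, or ranking makes the "Iterate" step measurable instead of anecdotal.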

Practical takeaway: AI search is a layer, not a wholesale replacement.

The hype around AI-powered search promises instant answers to every query. The reality: it’s powerful but situational. Semantic retrieval works for knowledge-heavy, unstructured data, but underdelivers for structured queries. Costs, latency, and adoption hurdles mean product teams must evaluate ROI carefully.

For SaaS companies, the winning strategy is hybrid: combine semantic search for meaning with traditional search for precision.

FAQs

Is AI search always better than keyword search?

No. It excels on unstructured content, but keyword search is faster and more reliable for structured filters.

How much does AI search cost?

From a few hundred dollars/month for a small dataset to more than $10k/month at enterprise scale.

Can I implement AI search without an ML team?

Yes. APIs and vector DBs make it accessible, but you need developers for integration and monitoring.