Why backups fail: real disaster recovery stories

Backups are worthless if they don’t restore. Yet in small teams, this scenario is common: a startup diligently runs nightly database dumps, only to discover weeks later that the dumps are corrupted, incomplete, or missing critical configuration files.

Real-world failures include:

- Unmonitored cron jobs: Backups stopped working months ago, but no one noticed until production went down.

- Single-point backups: A small business stored everything on one external hard drive, which failed after a power surge.

- Version mismatch: Database dumps couldn’t be restored on updated server versions, leaving critical data inaccessible.

- Partial coverage: Teams backed up databases but forgot about environment variables, SSL keys, or user-uploaded content.

For small teams, the biggest risks are assumptions (“we have backups, so we’re safe”) and lack of restoration tests.

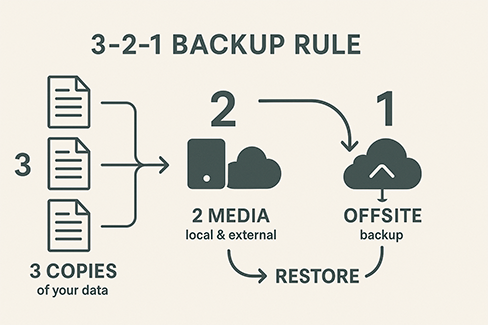

Fundamental principles: 3-2-1 rule adapted for small teams

The 3-2-1 backup rule is a timeless principle:

- 3 copies of data

- 2 different storage types

- 1 offsite location

For teams of 2–10 people, a practical adaptation looks like this:

- Primary: Production database + application server.

- Secondary: Automated cloud snapshot (AWS EBS, DigitalOcean Volume Snapshot, Linode Backup).

- Tertiary: Offsite copy (e.g., Backblaze B2, Wasabi, or S3 with lifecycle policies).

Small teams can’t afford enterprise-grade disaster recovery, but with automation and cheap cloud storage, the 3-2-1 rule is achievable without breaking budgets.

Backup strategy by data type

Databases: dumps vs snapshots vs replication

- Dumps: `mysqldump`, `pg_dump` → Simple, portable, but can be slow for large datasets.

- Snapshots: Cloud block storage snapshots → Fast and reliable, but vendor-dependent.

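- Replication: Continuous sync to a secondary DB → Great for near-zero downtime, but more complex. It also replicates mistakes such as an accidental delete, so it complements backups rather than replacing them.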

Checklist:

- For small DBs (<5GB), nightly dumps + weekly snapshots.

- For growing DBs (>5GB), weekly dumps, daily snapshots, and consider replication.
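One way a nightly dump ends up "corrupted or incomplete" is that a failed run leaves a half-written file sitting next to the good ones. The sketch below is one possible guard, not a prescribed method: the helper name `dump_to_file` and the `.partial` suffix are made up for illustration. It writes to a temporary file, compresses it, checks the archive, and only then moves it into place.

```shell
# dump_to_file OUT CMD [ARGS...]
# Hypothetical helper: runs CMD (e.g. pg_dump or mysqldump), captures its
# stdout in a temp file, compresses it, and only then moves it to OUT.
# The function body runs in a subshell, so its variables stay local.
dump_to_file() (
  out="$1"; shift
  tmp="${out}.partial"
  if "$@" > "$tmp" && gzip -c "$tmp" > "${tmp}.gz" && gzip -t "${tmp}.gz"; then
    rm -f "$tmp"
    mv "${tmp}.gz" "$out"
  else
    # Leave nothing behind that could be mistaken for a usable backup
    rm -f "$tmp" "${tmp}.gz"
    echo "dump failed, nothing written to $out" >&2
    exit 1
  fi
)
```

A call might look like `dump_to_file "/backups/mydb-$(date +%F).sql.gz" pg_dump mydb`, assuming the database is named `mydb` and connection settings come from the environment. The temp file costs extra disk space during the run; for large databases a streaming pipeline may be a better fit.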

Application files and configurations

- Use version control (Git) for code and configuration.

- Back up `.env` files, SSL keys, and third-party API credentials separately.

- Automate packaging configs into tarballs (`tar -czf app-config.tar.gz /etc/myapp`).
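As a rough sketch of automating the tarball step, the function below dates the archive, restricts its permissions (configs often contain secrets), and lists the archive back to confirm it is readable. The function name `package_configs` and the `app-config-YYYY-MM-DD.tar.gz` naming are assumptions for illustration.

```shell
# package_configs SRC_DIR DEST_DIR
# Hypothetical helper: creates DEST_DIR/app-config-YYYY-MM-DD.tar.gz,
# readable only by its owner, and prints the archive path on success.
package_configs() (
  src="$1"; dest="$2"
  archive="$dest/app-config-$(date +%F).tar.gz"
  umask 077   # the archive may hold secrets such as .env files and SSL keys
  # -C keeps paths inside the archive relative (myapp/...), not absolute
  tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || exit 1
  # Listing the archive forces tar to read it end to end
  tar -tzf "$archive" > /dev/null || exit 1
  echo "$archive"
)
```

For the example path used above, the call would be `package_configs /etc/myapp /backups`. Restricting permissions is not encryption; an archive that leaves the server should still be encrypted by the upload tool or the storage provider.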

User data and file uploads

- Store uploads in object storage (S3, B2, R2) with lifecycle rules.

- Enable versioning to recover deleted or overwritten files.

- Sync with `rclone` or vendor-native tools weekly.

Automated tools for 2-10 person teams

Cloud-native backup solutions

- AWS Backup: Centralized, policy-driven snapshots.

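- DigitalOcean Backups: Automatic weekly backups, priced as a percentage of the Droplet's cost (roughly $1–2/month on the smallest Droplets).

- Linode Backup Service: From $2/month per server (the price scales with plan size), covers daily + weekly retention.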

Best for teams that want “set and forget” coverage.

Reliable open-source scripts and tools

- Duplicati: Cross-platform, encrypts and uploads backups to multiple cloud providers.

- Restic: Lightweight, efficient, supports incremental backups.

- BorgBackup: Deduplication powerhouse, ideal for large datasets.

- Automated scripts with `cron` + `rclone`: Cheap, flexible option for engineers.

Example script:

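#!/bin/bash

# Stop at the first failed step so a broken dump is never uploaded

set -euo pipefail

DATE=$(date +%F)

mysqldump -u root -p"$MYSQL_PASS" mydb > "/backups/mydb-$DATE.sql"

tar -czf "/backups/app-$DATE.tar.gz" /var/www/myapp

# copy (not sync) so pruning local files never deletes remote backups

rclone copy /backups remote:team-backups --progress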

Restoration testing: weekly/monthly protocols

Untested backups give a false sense of security.

- Weekly test: Restore a database dump to a staging server.

- Monthly test: Spin up a fresh VM, deploy app + configs + DB from backups.

- Quarterly drill: Simulate a disaster (server wipe) and measure recovery time.

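Document RTO (Recovery Time Objective: how long a restore is allowed to take) and RPO (Recovery Point Objective: how much recent data you can afford to lose) targets, and compare each drill against them.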
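Both targets can be checked mechanically during a drill. The sketch below is illustrative only: `check_rto` times any restore command against a target in seconds, and `check_rpo` checks that a backup directory contains a file newer than a target in minutes. Both function names, and the idea of expressing the targets in seconds and minutes, are assumptions rather than part of any standard tool.

```shell
# check_rto RTO_SECONDS CMD [ARGS...]
# Runs the restore command, prints how long it took, and fails if the
# command fails or takes longer than the RTO target.
check_rto() (
  rto="$1"; shift
  start=$(date +%s)
  "$@" || { echo "restore command failed" >&2; exit 1; }
  elapsed=$(( $(date +%s) - start ))
  echo "restore took ${elapsed}s (target: ${rto}s)"
  [ "$elapsed" -le "$rto" ]
)

# check_rpo BACKUP_DIR RPO_MINUTES
# Succeeds only if BACKUP_DIR holds at least one file modified within
# the last RPO_MINUTES minutes.
check_rpo() (
  dir="$1"; minutes="$2"
  find "$dir" -type f -mmin "-$minutes" | grep -q .
)
```

With a four-hour RTO and a 24-hour RPO, a drill could run `check_rto 14400 ./restore-staging.sh` (the script name is a placeholder for your own restore procedure) and `check_rpo /backups 1440`. Timing with whole seconds is coarse, but recovery targets are usually measured in minutes or hours.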

Recovery documentation: simple playbooks

Every team should have a 1-page playbook stored in a shared drive (and printed). It should include:

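- Backup storage locations and where to find the credentials (for example, a named entry in the team password manager, since the playbook itself is shared and printed).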

- Step-by-step restore procedures.

- Emergency contact list.

Write it so that a non-engineer, such as a project manager, can follow the steps.

Backup monitoring: alerts that actually work

- Monitor with health checks (e.g., Healthchecks.io, Cronitor).

- Set up Slack/Email alerts for failed backup jobs.

- Verify file size and checksum after each run.

A backup that is much smaller than the previous run usually means the job was truncated or failed partway through.
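The size and checksum checks above can be sketched as a small post-backup step. This is a minimal example that assumes GNU coreutils (`sha256sum`) on the backup host; the function name `verify_backup` and the `.sha256` sidecar file are illustrative choices, and the minimum size is something you would set from your own recent backups.

```shell
# verify_backup FILE MIN_BYTES
# Hypothetical helper: fails if FILE is missing or smaller than MIN_BYTES;
# otherwise writes a SHA-256 checksum next to it as FILE.sha256.
verify_backup() (
  file="$1"; min_bytes="$2"
  [ -f "$file" ] || { echo "missing: $file" >&2; exit 1; }
  size=$(( $(wc -c < "$file") ))
  if [ "$size" -lt "$min_bytes" ]; then
    echo "too small: $file is $size bytes, expected at least $min_bytes" >&2
    exit 1
  fi
  # Stored so a later run (or a restore test) can detect a changed file
  sha256sum "$file" > "${file}.sha256"
)
```

After an upload or before a restore, `sha256sum -c /backups/mydb.sql.sha256` re-checks the file against the recorded checksum. To tie this into a health check, a backup script could end with something like `verify_backup "$file" 1000000 && curl -fsS -m 10 https://hc-ping.com/<your-uuid>`, where the URL is a placeholder for the ping address your monitoring service gives you; if the ping never arrives, the service raises the alert.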

Special cases: zero downtime and partial failures

- Zero downtime: Use replication + hot-standby DBs for mission-critical SaaS.

- Partial failures: Recover only lost components (e.g., file uploads) using object storage versioning.

- Combine snapshots (fast rollback) with granular dumps (specific record recovery).

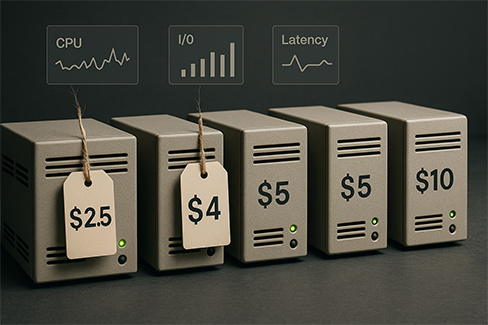

Real budget planning for backup infrastructure

Approximate monthly costs for small teams:

- Databases: $5–20 (snapshots or S3 storage).

- App + configs: $2–10 (tarballs synced via B2/Wasabi).

- User uploads: $5–15 per TB stored.

- Monitoring + alerts: free (open-source) to $10 (SaaS tools).

Total: roughly $12–55/month for a robust backup system, assuming about 1 TB of user uploads.

For small teams, backups must be simple, automated, and regularly tested. The 3-2-1 rule still applies, but lightweight tools like Restic or cloud-native snapshots make it achievable on tight budgets. Restoration testing is the difference between theory and survival. A written playbook and basic monitoring ensure your backups actually protect your business when disaster strikes.

FAQs

What’s the most common reason backups fail?

Lack of testing. Teams assume backups work but never verify restores.

Is object storage better than local drives for backups?

Yes, because it provides durability, redundancy, and offsite coverage.

How often should small teams test restores?

At least weekly for DBs, monthly for full application restores.