Edge computing explained: use cases beyond the hype

Edge computing brings computation closer to users by running code in globally distributed data centers instead of a single origin server. While it’s often hyped as a universal solution, its strongest applications are specific:

- Low-latency personalization (e.g., region-based content, A/B testing).

- Security and compliance (e.g., enforcing GDPR rules by region).

- Traffic shaping (e.g., caching APIs, authentication middleware).

- Reducing origin load (e.g., proxying APIs or transforming requests).

Unlike traditional server-side apps, edge functions trade deep compute power for speed and proximity. Developers must design with lightweight tasks in mind.

Cloudflare Workers: the mature pioneer

Launched in 2017, Cloudflare Workers was one of the first mainstream edge functions platforms. It runs code in V8 isolates rather than containers (V8 is the JavaScript engine that powers Chrome), which makes startup nearly instant compared with container-based serverless.

V8 runtime, available APIs, limitations

- Runtime: Lightweight V8 isolates (not containers).

- Languages: JavaScript, TypeScript, Rust (via WASM).

- APIs: Fetch, Streams, KV store, Durable Objects, R2 storage.

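- Limitations:

  - CPU time is capped per request: 10ms on the Free plan, with far higher limits on the Paid plan (30 seconds by default at the time of writing).

  - No full Node.js environment: the `nodejs_compat` compatibility flag enables a subset of Node.js APIs, but there is no persistent local disk of the kind Node's native `fs` module expects.

  - Debugging locally can feel different from production.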

Example Worker:

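```javascript
// Minimal module-syntax Worker: every request receives the same plain-text response.
export default {
  async fetch(request) {
    return new Response("Hello from Cloudflare Worker!");
  },
};
```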
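Real Workers usually do more than return a string. As a minimal sketch, the handler below uses a KV namespace as a read-through cache in front of an upstream API. The binding name `CACHE_KV` and the origin `https://api.example.com` are hypothetical placeholders, the upstream is assumed to return JSON, and the 60-second TTL is simply KV's minimum `expirationTtl`. A real Worker would `export default` the object; that line is left as a comment so the snippet also runs as a plain script.

```javascript
// Read-through cache for GET requests in a Cloudflare Worker.
// Assumptions (hypothetical): a KV namespace bound as CACHE_KV and a placeholder
// upstream that returns JSON. In a real Worker module: `export default worker;`
const ORIGIN = "https://api.example.com"; // hypothetical upstream API
const TTL_SECONDS = 60; // KV's minimum expirationTtl

const worker = {
  async fetch(request, env) {
    // This sketch only caches idempotent reads.
    if (request.method !== "GET") {
      return new Response("Method Not Allowed", { status: 405 });
    }
    const { pathname, search } = new URL(request.url);
    const key = pathname + search; // one cache entry per path + query string

    // 1. Serve from KV when possible (get() returns null on a miss).
    const cached = await env.CACHE_KV.get(key);
    if (cached !== null) {
      return new Response(cached, {
        headers: { "content-type": "application/json", "x-cache": "HIT" },
      });
    }

    // 2. On a miss, call the origin and store successful bodies in KV.
    const upstream = await fetch(ORIGIN + key);
    if (upstream.status === 204) {
      return new Response(null, { status: 204, headers: { "x-cache": "MISS" } });
    }
    const body = await upstream.text();
    if (upstream.ok) {
      await env.CACHE_KV.put(key, body, { expirationTtl: TTL_SECONDS });
    }
    return new Response(body, {
      status: upstream.status,
      headers: { "content-type": "application/json", "x-cache": "MISS" },
    });
  },
};
```

Two design notes: KV is eventually consistent, so a fresh `put` may not be visible at other locations immediately, and in production you would likely hand the `put` to `ctx.waitUntil()` (the third `fetch` argument) so the write does not delay the response.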

Pricing model and when it gets expensive

- Free tier: 100k requests/day.

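- Paid (Standard): $5/month minimum, including 10 million requests and 30 million CPU milliseconds; then $0.30 per additional million requests and $0.02 per additional million CPU milliseconds. There is no charge for egress bandwidth.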

- Gotcha: Costs rise sharply when using Durable Objects or storing large data. For high-volume APIs with stateful logic, costs can outpace traditional serverless.

Vercel Edge Functions: perfect Next.js integration

Vercel Edge Functions launched later but are tightly integrated with Next.js. For teams already on Vercel, integration is seamless: middleware and API routes can run at the edge with minimal configuration.

Developer experience vs vendor lock-in

- Pros:

  - Write Next.js middleware → auto-deployed as an Edge Function.

  - Great DX: local testing, TypeScript support, fast deployments.

- Cons:

  - Vendor lock-in: strongly tied to the Vercel platform. Migrating away can require rewriting routes and replacing Vercel-specific services such as Edge Config.

  - Less flexible runtime compared to Cloudflare’s ecosystem.

Runtime limitations and cold starts

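- Runtime: Web-standard APIs plus only a small subset of Node.js core modules (such as `buffer` and `events`); no filesystem access.

- Cold starts: Usually negligible thanks to isolates, but less predictable under heavy load than Workers.

- Limitations: A function must begin sending its response within about 25 seconds (it can keep streaming afterwards), bundle size is capped, and environment variable handling is limited.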

Technical comparison: performance, limits, debugging

| Feature | Cloudflare Workers | Vercel Edge Functions |
|---|---|---|
| Runtime | V8 isolates (multi-language via WASM) | V8 isolates, Next.js focused |
| Startup time | <1ms (cold starts negligible) | <1–5ms, low but variable |
| Execution time limit | 10ms CPU per request (Free); 30s CPU by default (Paid) | Must begin responding within ~25s; can keep streaming |
| Node.js compatibility | Partial, via the `nodejs_compat` flag | Partial, small subset of Node APIs |
| Debugging tools | Wrangler CLI, Cloudflare Dashboard | Vercel CLI, browser-like DX |
| Storage integrations | KV, Durable Objects, R2 | Edge Config; KV/Redis via Marketplace integrations (formerly Vercel KV) |

Cloudflare’s larger network generally gives it the edge in raw global latency, while Vercel offers a smoother experience for Next.js developers.

Ideal use cases for each platform

A/B testing, auth middleware, API proxying

- Cloudflare Workers: API proxying, caching, security headers, lightweight API transforms.

- Vercel Edge: A/B testing and middleware directly tied to Next.js apps.
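At its core, edge A/B testing means assigning a visitor to a variant once and keeping that assignment sticky with a cookie. The sketch below uses only web-standard `Request`, `Response`, and `Headers`, so the same logic could sit in a Worker or be adapted into Next.js middleware. The cookie name `ab-variant`, the 50/50 split, the 30-day lifetime, and the use of a 32-bit FNV-1a hash for deterministic bucketing are all our assumptions, and the caller is assumed to supply some stable visitor id.

```javascript
// Sticky A/B assignment using only web-standard APIs.
// Assumptions (hypothetical): cookie "ab-variant", variants "a"/"b",
// a 50/50 split, and a stable visitor id supplied by the caller.
const AB_COOKIE = "ab-variant";

// 32-bit FNV-1a over UTF-16 code units: the same id always lands in the same bucket.
function fnv1a(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by the FNV prime, keep unsigned
  }
  return hash >>> 0;
}

// Minimal Cookie header parser: returns the value for `name`, or null.
function readCookie(request, name) {
  const header = request.headers.get("cookie") || "";
  for (const part of header.split(";")) {
    const [key, ...rest] = part.trim().split("=");
    if (key === name) return rest.join("=");
  }
  return null;
}

// Reuse a valid existing assignment; otherwise bucket the visitor by hash.
function chooseVariant(request, visitorId) {
  const existing = readCookie(request, AB_COOKIE);
  if (existing === "a" || existing === "b") return existing;
  return fnv1a(visitorId) % 2 === 0 ? "a" : "b";
}

// Example response: tells the client its variant and persists it for 30 days.
function handleAB(request, visitorId) {
  const variant = chooseVariant(request, visitorId);
  return new Response(`variant ${variant}`, {
    headers: {
      "content-type": "text/plain",
      "set-cookie": `${AB_COOKIE}=${variant}; Path=/; Max-Age=2592000; SameSite=Lax`,
    },
  });
}
```

In Next.js middleware you would typically run the same bucketing logic and then rewrite to a variant-specific page with `NextResponse.rewrite()`.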

Geo-routing and regional compliance

- Cloudflare: Stronger global presence (300+ locations). Well suited to country-based routing and region-specific rules such as GDPR.

- Vercel Edge: Good for region-specific features but fewer PoPs than Cloudflare.
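Country-based routing usually starts from a geolocation header set by the platform: Cloudflare can add `CF-IPCountry` (Workers also expose `request.cf.country`), and Vercel sets `x-vercel-ip-country`. Here is a minimal sketch that picks a regional backend from that value; the hostnames and the abbreviated EU country list are hypothetical, and routing by visitor location alone does not guarantee where data is stored or processed.

```javascript
// Pick a regional backend from the visitor's country.
// Assumptions (hypothetical): the hostnames below and an abbreviated EU list.
const EU_COUNTRIES = new Set(["AT", "BE", "DE", "ES", "FR", "IE", "IT", "NL", "PL", "SE"]);
const ORIGINS = {
  eu: "https://eu.api.example.com",       // hypothetical EU-hosted backend
  fallback: "https://us.api.example.com", // hypothetical default backend
};

// Two-letter country code from whichever platform header is present.
function countryOf(request) {
  const code =
    request.headers.get("cf-ipcountry") ||        // set by Cloudflare
    request.headers.get("x-vercel-ip-country") || // set by Vercel
    "XX";                                         // unknown
  return code.toUpperCase();
}

// Rebuild the request URL against the chosen regional origin.
function regionalUrl(request) {
  const { pathname, search } = new URL(request.url);
  const origin = EU_COUNTRIES.has(countryOf(request)) ? ORIGINS.eu : ORIGINS.fallback;
  return origin + pathname + search;
}
```

Inside a Worker you might then forward the request with `fetch(regionalUrl(request), request)`.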

Developer experience: deployment, monitoring, debugging

- Cloudflare Workers: Deploy with the Wrangler CLI, test locally with `wrangler dev` (which runs on Miniflare), and push to 300+ PoPs. Monitor with Workers Analytics, live logs via `wrangler tail`, or a third-party APM.

- Vercel Edge: Integrated into the Vercel dashboard, with local development via `vercel dev`. Superior for Next.js apps, but less flexible for generic workloads.

For debugging complex APIs, Workers offer real-time log streaming with `wrangler tail` and logs in the Cloudflare dashboard, while Vercel logs are more tied to the request flow through Next.js routes.

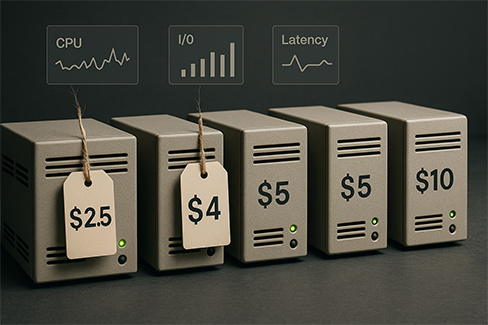

Real production costs: examples with actual traffic

Scenario: 50 million requests/month, 100GB egress

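- Cloudflare Workers (Standard plan):

  - Base fee: $5 (includes 10M requests and 30M CPU ms)

  - Requests: $12 (40M extra × $0.30/M)

  - Egress: $0 (Workers does not bill for bandwidth)

  - CPU time: $0 if requests average 0.6ms of CPU or less (30M ms ÷ 50M requests); beyond that, $0.02 per extra million CPU ms

  - Total: about $17, plus any CPU overage

- Vercel Edge:

  - Included in the Pro plan ($20/user/month) up to its usage quotas. Beyond quota, pricing scales.

  - Egress: Pro includes 1TB of data transfer per month, so 100GB fits within the quota.

  - Total: $20+ per seat, plus any request or compute overage, depending on team size.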

For high-traffic APIs, Workers are more cost-efficient. For Next.js sites, Vercel may offset cost with saved developer hours.
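The Workers arithmetic is easy to script. This is a sketch assuming the Workers Standard list prices at the time of writing ($5/month base including 10M requests and 30M CPU ms, then $0.30 per extra million requests and $0.02 per extra million CPU ms, no egress charges); the function name is ours, and the average CPU time per request is an input you need to measure for your own workload.

```javascript
// Rough monthly cost estimate for Cloudflare Workers (Standard plan).
// Assumed list prices at the time of writing; check the current pricing page.
const PRICING = {
  baseUsd: 5,                  // monthly minimum
  includedRequests: 10e6,      // requests covered by the base fee
  usdPerMillionRequests: 0.3,
  includedCpuMs: 30e6,         // CPU milliseconds covered by the base fee
  usdPerMillionCpuMs: 0.02,
};

// requests: monthly request count; avgCpuMsPerRequest: measured average CPU time.
function workersMonthlyCost(requests, avgCpuMsPerRequest, p = PRICING) {
  const extraRequests = Math.max(0, requests - p.includedRequests);
  const extraCpuMs = Math.max(0, requests * avgCpuMsPerRequest - p.includedCpuMs);
  const total =
    p.baseUsd +
    (extraRequests / 1e6) * p.usdPerMillionRequests +
    (extraCpuMs / 1e6) * p.usdPerMillionCpuMs;
  return Math.round(total * 100) / 100; // round to cents
}
```

Under these assumptions, `workersMonthlyCost(50e6, 1)` returns 17.4: $5 base, $12 for 40M extra requests, and $0.40 for 20M extra CPU ms. For lightweight handlers like this, the request charge dominates.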

When to use edge functions vs traditional server-side

Use edge functions when:

- You need global distribution and <50ms latency.

- Workloads are lightweight (auth, routing, headers, caching).

- Compliance requires regional execution.

Stick with traditional server-side/serverless when:

- You need heavy compute (video processing, AI inference).

- Execution exceeds 50–100ms regularly.

- Full Node.js APIs are required.
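Combining the two models often means a thin edge layer in front of a conventional backend: the edge rejects bad requests early and adds headers, while heavy work runs elsewhere. A minimal sketch in the Workers handler style; `BACKEND_URL`, the `API_TOKEN` binding, and the specific security headers are hypothetical choices, and the shared-secret comparison is only a stand-in for real token verification such as checking a signed JWT.

```javascript
// Thin edge layer in front of a heavier backend (hybrid edge + serverless).
// Assumptions (hypothetical): BACKEND_URL, an API_TOKEN secret binding, and a
// shared-secret check standing in for real token (e.g. JWT) verification.
// In a real Worker module: `export default edge;`
const BACKEND_URL = "https://backend.example.com"; // hypothetical serverless backend

const SECURITY_HEADERS = {
  "strict-transport-security": "max-age=31536000; includeSubDomains",
  "x-content-type-options": "nosniff",
  "referrer-policy": "strict-origin-when-cross-origin",
};

const edge = {
  async fetch(request, env) {
    // 1. Reject unauthenticated calls before they cost backend compute.
    const auth = request.headers.get("authorization") || "";
    if (!env.API_TOKEN || auth !== `Bearer ${env.API_TOKEN}`) {
      return new Response("Unauthorized", { status: 401 });
    }

    // 2. Forward method, headers, path, query, and (buffered) body to the backend.
    const { pathname, search } = new URL(request.url);
    const hasBody = !["GET", "HEAD"].includes(request.method);
    const upstream = await fetch(BACKEND_URL + pathname + search, {
      method: request.method,
      headers: request.headers,
      body: hasBody ? await request.arrayBuffer() : undefined,
    });

    // 3. Copy the backend response into a mutable one and add security headers.
    const headers = new Headers(upstream.headers);
    for (const [name, value] of Object.entries(SECURITY_HEADERS)) {
      headers.set(name, value);
    }
    return new Response(upstream.body, {
      status: upstream.status,
      statusText: upstream.statusText,
      headers,
    });
  },
};
```

Buffering the body with `arrayBuffer()` keeps the sketch simple and portable; for large uploads you would stream `request.body` instead.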

Conclusion

- Cloudflare Workers: More mature, cost-effective at scale, excellent for generic APIs, security, and geo-routing.

- Vercel Edge Functions: Best for Next.js developers, with excellent DX, but comes with vendor lock-in and often higher costs at scale.

Edge functions aren’t a replacement for servers—they’re a complement. The best architecture often mixes edge for routing/auth + serverless for heavy lifting.

FAQs

Are Cloudflare Workers faster than Vercel Edge?

Often, for a globally distributed audience: Workers run in more locations, which tends to lower average latency.

Can I run Node.js libraries on Edge?

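Not fully. Both platforms center on web-standard APIs and support only a subset of Node.js modules (Cloudflare through its `nodejs_compat` flag), so libraries that rely on native addons or a local filesystem may not work.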

Which is cheaper?

At scale, Cloudflare Workers. For small Next.js apps, Vercel’s Pro plan may be simpler.