RAG explained without jargon: what problem it actually solves

Retrieval-Augmented Generation (RAG) is a way to make AI models more accurate by letting them “look things up” before answering. Instead of forcing an LLM to memorize everything, RAG connects it to an external knowledge base.

The problem it solves: hallucinations and outdated answers. If you ask an LLM about your company’s policies, it won’t know them unless those documents were part of its training data. With RAG, you give it access to your docs so it can retrieve the right context before generating a response.

Think of it as: search engine + AI writer combined. The search pulls relevant snippets, and the AI turns them into a natural answer.
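
The "search + writer" split can be sketched in a few lines. This is only an illustration: the retriever below ranks by shared words rather than embeddings, the sample documents are made up, and the function names `retrieve` and `build_prompt` are hypothetical. The resulting prompt is what you would then send to an LLM.

```python
def retrieve(question: str, snippets: list[str], k: int = 2) -> list[str]:
    """Toy retriever: rank snippets by how many question words they share."""
    q_words = set(question.lower().split())
    ranked = sorted(
        snippets,
        key=lambda s: len(q_words & set(s.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Put the retrieved snippets in front of the question for the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {question}"
    )


# Made-up documents standing in for a company knowledge base.
docs = [
    "Employees get 25 days of paid vacation per year.",
    "The office is closed on public holidays.",
    "Expense reports are due by the 5th of each month.",
]
question = "How many vacation days do employees get?"
prompt = build_prompt(question, retrieve(question, docs, k=1))
print(prompt)
```

A real pipeline swaps the word-overlap ranking for vector search, but the shape stays the same: retrieve first, then generate from what was retrieved.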

When RAG is the solution (and when it’s not)

Ideal use cases vs over-engineering

Good RAG use cases:

- Internal knowledge bases (HR docs, engineering manuals).

- Customer support bots that need real policies.

- SaaS apps offering AI-powered search over user-generated data.

- Research workflows where precision matters.

Over-engineering happens when:

- Your dataset is tiny (a few pages → a normal prompt works).

- You just need structured lookup (like SQL queries).

- Latency is critical and an external search step slows responses.

Simpler alternatives to consider first

Before jumping into RAG, consider:

- Prompt engineering with few-shot examples.

- Fine-tuned LLMs for narrow tasks.

- Embedding search via a single API call (no full pipeline).

RAG shines when your knowledge base is dynamic, large, or private.

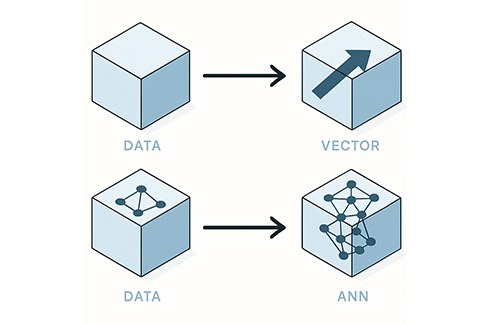

Basic concepts: embeddings and similarity search

Vector representations explained simply

Embeddings turn text into vectors (long lists of numbers). Similar texts → similar vectors.

Example:

- “How to reset password?” → [0.12, 0.44, -0.98, …]

- “Password reset guide” → [0.11, 0.46, -0.97, …]

These vectors are close in mathematical space, so a search engine can find them even if the wording differs.
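
"Close in mathematical space" is usually measured with cosine similarity. Here is a minimal sketch that uses only the three components shown in the example above (real embeddings have hundreds or thousands of dimensions); the `unrelated` vector is made up for contrast.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


reset_question = [0.12, 0.44, -0.98]  # "How to reset password?" (truncated)
reset_guide = [0.11, 0.46, -0.97]     # "Password reset guide" (truncated)
unrelated = [-0.70, 0.10, 0.65]       # made-up vector for an unrelated text

print(cosine_similarity(reset_question, reset_guide))  # very close to 1
print(cosine_similarity(reset_question, unrelated))    # negative
```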

Why it works better than keyword search

- Keyword search: matches exact words, so a query for “reset” can miss a document that says “recover”.

- Vector search: captures meaning (“reset password” ≈ “forgot credentials”).

This semantic understanding makes RAG effective for user questions phrased in many ways.
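
To see the keyword side of that comparison fail, here is a tiny sketch of exact-word matching (the helper name `shared_keywords` is hypothetical). The vector side needs an embedding model, so it is not reproduced here.

```python
def shared_keywords(query: str, document: str) -> set[str]:
    """Words that appear in both texts, compared case-insensitively."""
    return set(query.lower().split()) & set(document.lower().split())


# Same intent, different wording: exact matching finds nothing in common.
print(shared_keywords("reset password", "forgot credentials"))

# Same wording: exact matching works.
print(shared_keywords("reset password", "Password reset guide"))
```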

Minimum viable technical stack for RAG

APIs vs self-hosted: real trade-offs

- APIs:

  - Examples: OpenAI embeddings, Pinecone, Weaviate Cloud.

  - Easy to set up, managed infra, faster iteration.

  - Downsides: recurring costs, vendor lock-in.

- Self-hosted:

  - Examples: pgvector (PostgreSQL extension), Milvus, Qdrant.

  - More control, often cheaper at scale.

  - Downsides: ops overhead, DevOps team required.

For small teams: start with API-based services, then migrate to self-hosted once scale and budget justify it.

Vector databases vs PostgreSQL extensions

- Vector DBs (Pinecone, Qdrant, Weaviate): Built for high-performance similarity search, with indexing, filters, and scaling baked in.

- Postgres + pgvector: Good compromise for teams already on Postgres. Simple, low-cost, but less optimized for very large datasets.

Step-by-step implementation with code

Chunking strategies for different content types

You rarely embed entire documents. Instead, split into chunks:

- Long docs: split by sections (500–1000 tokens).

- Code: split by function/class.

- Transcripts: split by speaker turns or time intervals.

Bad chunking leads to irrelevant results or missing context.
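
As one possible starting point for long docs, the sketch below packs paragraphs into chunks up to a size limit. It counts words as a rough stand-in for tokens (an assumption; a real tokenizer will give different numbers), and the function name `chunk_paragraphs` is hypothetical.

```python
def chunk_paragraphs(text: str, max_words: int = 200) -> list[str]:
    """Greedily pack paragraphs into chunks of at most max_words words.

    A single paragraph longer than max_words is kept whole as its own chunk.
    """
    chunks, current, count = [], [], 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        words = len(para.split())
        # Start a new chunk when adding this paragraph would exceed the limit.
        if current and count + words > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += words
    if current:
        chunks.append("\n\n".join(current))
    return chunks


sample = "\n\n".join(["alpha " * 5, "beta " * 5, "gamma " * 5])
chunks = chunk_paragraphs(sample, max_words=10)
print(len(chunks))
```

Splitting on paragraph boundaries keeps related sentences together. For code or transcripts you would split on functions or speaker turns instead, as listed above.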

Embedding models: OpenAI vs open-source

- OpenAI (text-embedding-3-small): High quality, easy to use, cheap ($0.00002/1k tokens).

- Open-source (e.g., SentenceTransformers, InstructorXL): No per-call costs, full control, but need hosting.

Python example with OpenAI + pgvector:

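    import psycopg2
    from openai import OpenAI

    # Replace with your real key (or load it from an environment variable).
    client = OpenAI(api_key="YOUR_KEY")

    text = "How to reset a password?"

    # Request one embedding; the response carries one item per input text.
    embedding = client.embeddings.create(
        model="text-embedding-3-small",
        input=text,
    ).data[0].embedding

    # Assumes a `documents` table with a pgvector `embedding` column already exists.
    conn = psycopg2.connect("dbname=mydb user=me password=secret")
    cur = conn.cursor()

    # psycopg2 sends the Python list as a SQL array; ::vector casts it to pgvector's type.
    cur.execute(
        "INSERT INTO documents (content, embedding) VALUES (%s, %s::vector)",
        (text, embedding),
    )
    conn.commit()

    cur.close()
    conn.close()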
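
The snippet above only stores a row. The retrieval half might look like the sketch below, which assumes the same `documents` table and that you have already embedded the user's question with the same model. The helper names `to_vector_literal` and `search` are hypothetical; `<=>` is pgvector's cosine distance operator.

```python
# Smaller cosine distance means more similar, so ascending order puts
# the best matches first.
TOP_K_SQL = """
    SELECT content
    FROM documents
    ORDER BY embedding <=> %s::vector
    LIMIT %s
"""


def to_vector_literal(embedding):
    """Format a list of numbers in pgvector's text form, e.g. '[0.1,0.2]'."""
    return "[" + ",".join(str(float(x)) for x in embedding) + "]"


def search(cur, query_embedding, k=5):
    """Return the k stored contents closest to query_embedding.

    `cur` is an open database cursor (for example from psycopg2).
    """
    cur.execute(TOP_K_SQL, (to_vector_literal(query_embedding), k))
    return [row[0] for row in cur.fetchall()]
```

Usage would be along the lines of `search(conn.cursor(), question_embedding, k=5)`, with the returned snippets passed to the LLM as context.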

Evaluation and iterative improvement

Test your RAG pipeline with real queries from users. Check:

- Precision: Does the answer use correct snippets?

- Coverage: Are important docs being retrieved?

- Latency: Is it fast enough for production?

Iterate by improving chunking, embeddings, or retrieval filters.
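
One simple way to put a number on coverage is a hit rate: for each test query, did the document you expected show up in the top results? The sketch below assumes you have hand-labeled a few queries with the document that should be retrieved; the queries, document ids, and the name `hit_rate_at_k` are all made up for illustration.

```python
def hit_rate_at_k(results: dict, expected: dict, k: int = 3) -> float:
    """Fraction of queries whose expected doc id appears in the top k results."""
    hits = sum(
        1
        for query, doc_id in expected.items()
        if doc_id in results.get(query, [])[:k]
    )
    return hits / len(expected)


# Hand-labeled expectations: query -> id of the doc that should be retrieved.
expected = {
    "reset password": "doc-7",
    "vacation policy": "doc-2",
    "expense deadline": "doc-9",
}
# What the retriever actually returned, best match first.
results = {
    "reset password": ["doc-7", "doc-1", "doc-3"],
    "vacation policy": ["doc-5", "doc-2", "doc-8"],
    "expense deadline": ["doc-4", "doc-6", "doc-1"],
}

print(hit_rate_at_k(results, expected, k=3))
print(hit_rate_at_k(results, expected, k=1))
```

Re-running the same labeled set after each change to chunking or filters shows whether the change actually helped.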

Operational costs and scaling considerations

- Embeddings: $0.02–$0.10 per million tokens (API). Costs grow with dataset size.

- Storage: Vector DBs charge per GB + request volume.

- Latency: API-based retrieval typically adds around 100–300 ms to response times.

At scale (millions of docs, high QPS), costs shift from LLM calls to storage + retrieval infrastructure.
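
A back-of-the-envelope calculation helps make these numbers concrete. The sketch below assumes 1 million chunks of about 500 tokens each, the low end of the price range above, and 1536-dimensional vectors stored as 4-byte floats. It counts raw vector data only, not indexes or metadata, so treat the result as a lower bound.

```python
def embedding_cost_usd(num_docs, tokens_per_doc, usd_per_million_tokens):
    """One-time cost of embedding a corpus through a paid API."""
    return num_docs * tokens_per_doc / 1_000_000 * usd_per_million_tokens


def vector_storage_gb(num_vectors, dimensions, bytes_per_value=4):
    """Raw size of the stored vectors, excluding indexes and metadata."""
    return num_vectors * dimensions * bytes_per_value / 1e9


cost = embedding_cost_usd(1_000_000, 500, 0.02)
size = vector_storage_gb(1_000_000, 1536)
print(f"embedding cost: ${cost:.2f}, raw vectors: {size:.2f} GB")
```

Under these assumptions the one-time embedding bill is small next to the ongoing cost of keeping several gigabytes of vectors indexed and queryable.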

Common pitfalls and how to avoid them

- Indexing garbage: Don’t embed duplicate or irrelevant docs. Quality matters more than volume.

- Bad chunk sizes: Too small = no context. Too big = irrelevant matches.

- Ignoring monitoring: Track retrieval precision and errors in production.

- Relying only on embeddings: Sometimes keyword filters + vectors combined work best.
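
The last point can be sketched as a two-step search: filter by required keywords first, then rank what is left by vector similarity. The documents and two-dimensional vectors below are made up, and `hybrid_search` is a hypothetical helper, not a library function.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def hybrid_search(required_terms, query_vec, docs, k=3):
    """Keep docs containing every required term, then rank by similarity."""
    candidates = [
        d for d in docs
        if all(term in d["text"].lower() for term in required_terms)
    ]
    candidates.sort(key=lambda d: cosine(query_vec, d["vec"]), reverse=True)
    return [d["text"] for d in candidates[:k]]


docs = [
    {"text": "Reset your VPN password", "vec": [0.9, 0.1]},
    {"text": "Reset your email password", "vec": [0.8, 0.3]},
    {"text": "VPN setup guide", "vec": [0.2, 0.9]},
]

# The email doc is semantically close to the query but lacks the keyword.
print(hybrid_search(["vpn"], [1.0, 0.0], docs))
```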

Conclusion

RAG makes LLMs practical for real business apps, but it’s not a silver bullet. For non-ML teams, the path is clear: start small with APIs, learn through real queries, and only scale to self-hosted solutions once usage grows. Done right, RAG can turn a generic LLM into a domain expert powered by your own data.

FAQs

Is RAG better than fine-tuning?

They solve different problems: RAG adds fresh external data; fine-tuning changes the model’s behavior. Often they’re combined.

Do I need a vector database to start?

No. Postgres with pgvector or even a JSON store + cosine similarity can work for small datasets.
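
For a sense of how little is needed at small scale, here is a sketch of the "JSON store + cosine similarity" option. The stored vectors are the truncated example vectors from earlier plus one made-up entry, and `top_k` is a hypothetical helper.

```python
import json
import math

# In practice this would be a file on disk; a string keeps the sketch self-contained.
STORE_JSON = """[
  {"text": "Password reset guide", "embedding": [0.11, 0.46, -0.97]},
  {"text": "Vacation policy", "embedding": [-0.70, 0.10, 0.65]}
]"""


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_embedding, store, k=1):
    """Brute-force search: score every stored item and keep the best k."""
    ranked = sorted(
        store,
        key=lambda item: cosine(query_embedding, item["embedding"]),
        reverse=True,
    )
    return [item["text"] for item in ranked[:k]]


store = json.loads(STORE_JSON)
print(top_k([0.12, 0.44, -0.98], store))
```

Scoring every item on each query is fine for a few thousand entries; beyond that, an indexed store such as pgvector starts to pay off.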

What’s the biggest hidden cost?

Storage and retrieval latency at scale. Embedding APIs are cheap, but querying large datasets adds infra costs.